Open Software/Hardware Platform for Human-Computer Interface Based on Electrooculography (EOG) Signal Classification

1 Institute of Medical Psychology and Behavioural Neurobiology, University of Tübingen, Silcherstraße 5, 72076 Tübingen, Germany

2 Wyss Center for Bio and Neuroengineering, Chemin des Mines 9, CH 1202 Geneva, Switzerland

* Author to whom correspondence should be addressed.

Received: 21 February 2020 / Revised: 21 April 2020 / Accepted: 23 April 2020 / Published: 25 April 2020

Abstract

Electrooculography (EOG) signals have been widely used in Human-Computer Interfaces (HCI). The HCI systems proposed in the literature make use of self-designed or closed environments, which restrict the number of potential users and applications. Here, we present a system for classifying four directions of eye movements employing EOG signals. The system is based on open source ecosystems, the Raspberry Pi single-board computer, the OpenBCI biosignal acquisition device, and an open-source Python library. The designed system provides a cheap, compact, and easy to carry system that can be replicated or modified. We used Maximum, Minimum, and Median trial values as features to create a Support Vector Machine (SVM) classifier. A mean of 90% accuracy was obtained from 7 out of 10 subjects for online classification of Up, Down, Left, and Right movements. This classification system can be used as an input for an HCI, i.e., for assisted communication in paralyzed people.

1. Introduction

In the past few years, we have seen an exponential growth in the development of Human-Computer Interface (HCI) systems. These systems have been applied for a wide range of purposes like controlling a computer cursor [1], a virtual keyboard [2], a prosthesis [3], or a wheelchair [4,5,6,7]. They could also be used for patient rehabilitation and communication [8,9,10,11]. HCI systems can make use of different input signals such as voice [7], electromyography (EMG) [12], electroencephalography (EEG) [13], near-infrared spectroscopy (NIRS) [14,15,16] or electrooculography (EOG) [5].

In this paper, we describe an EOG classification system capable of accurately and consistently classifying Up, Down, Left, and Right eye movements. The system is small, easy to carry, with considerable autonomy, and economical. It was developed using open hardware and software, not only because of economic reasons, but also to ensure that the system could reach as many people as possible and could be improved and adapted in the future by anyone with the required skills.

The end goal of this work is to build a system that could be easily connected to a communication or movement assistance device like a wheelchair, any kind of speller application, or simply a computer mouse and a virtual keyboard.

To achieve these objectives, we have developed and integrated the code needed for:

- Acquiring the Electrooculography (EOG) signals.

- Processing these signals.

- Extracting the signal features.

- Classifying the features previously extracted.

EOG measures the dipole direction changes of the eyeball, with the positive pole in the front [17]. The technique of recording these potentials was introduced for diagnostic purposes in the 1930s by R. Jung [18]. The presence of electrically active nerves in the posterior part of the eyeball, where the retina is placed, and the front part, mainly the cornea, creates the difference in potential on which EOG is based [19]. This creates an electric dipole between the cornea and the retina, and its movements generate the potential differences that we can record in an EOG.

There are several EOG HCI solutions present in the literature. One of the problems with current HCI systems is their size and lack of autonomy, the use of proprietary software, or being based on self-designed acquisition and processing devices. Regarding the acquisition system, the most common approach is to use a self-designed acquisition device [1,4,20,21,22]. In our view, this solution dramatically restricts the number of users who can adopt this system. Other proposed systems make use of commercial amplifiers [23,24], which in turn make use of proprietary software and require robust processing systems, mainly laptops. This also reduces the number of potential users of the system and its applications since it increases the cost of the system and reduces its flexibility, portability, and autonomy. As far as signal processing is concerned, most systems choose to use a laptop to carry out these calculations [1,20,21,22,24,25], but we can also find the use of self-designed boards [6,26]. Table 1 shows the characteristics of some solutions present in the literature as a representation of the current state of the art. The goal of our work is to achieve results equivalent to the present state of the art using an open paradigm, demonstrating that it is possible to arrive at a solution using cheaper components that could be modified to build a tailored solution. As far as we know, this is the first time that an open system is presented in this scope.

In our system, the signal is acquired using the OpenBCI Cyton Board (OpenBCI Cyton official website), a low-cost open software/hardware biosensing device, resulting in an open hardware/software-based system that is portable, with considerable autonomy and flexibility.

Once we have the EOG signal, it is processed using a Raspberry Pi (Raspberry Pi 3B+ official website), a single-board computer that allows installing a Linux-based distribution, which is small, cheap, and gives us the option to use non-proprietary software.

Features are then extracted from the acquired signal and classified employing a machine learning algorithm. The feature extraction process aims to reduce the dimensionality of the input data without losing relevant information for classification [28], maximizing the separation between elements of different classes while minimizing it between elements of the same class [27]. To achieve this, several models have been proposed for EOG feature extraction [29,30,31,32]. We employed a Support Vector Machine (SVM) to classify the data [33,34], which creates a boundary to split the given data points into two different groups.

The result of this process, in the context of the EOG signal discussed in this article, is the classification of the subject's eye movement to be used as input commands for further systems. This process and the tools used for it are explained in detail in Section 2. Section 3 shows the performance achieved by the system. Finally, in Section 4, we discuss the designed system and compare it with existing related work, along with its limitations and future work.

2. Materials and Methods

2.1. Hardware-Software Integration

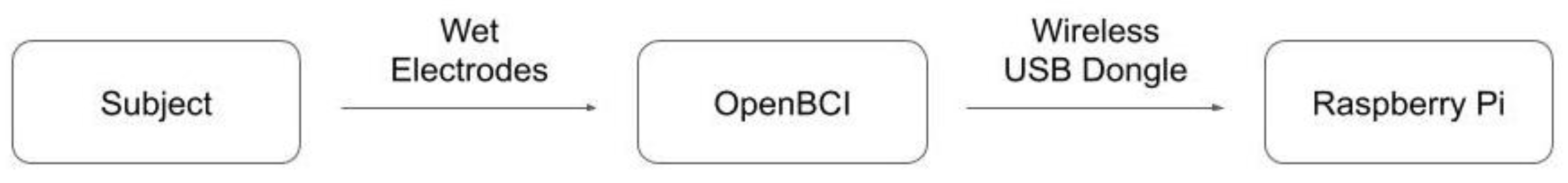

In the present study, the OpenBCI Cyton board was used for the signal acquisition. This board contains a PIC32MX250F128B microcontroller, a Texas Instruments ADS1299 analog/digital converter, a signal amplifier and an eight-channel neural interface. This device is distributed by OpenBCI (USA). Figure 1 depicts the layout of the system.

This device gives us enough precision and sampling rate (250 Hz) for our needs, it has an open-source environment (including a Python library to work with the boards (OpenBCI Python repository)), it has an active and large community, and it can be powered with a power bank, which is a light and mobile solution. Attached to the board, we have four wet electrodes connected to two channels on the board in differential mode. Differential mode computes the voltage difference between the two electrodes connected to the channel and doesn't need a reference electrode. The two channels correspond to the horizontal and vertical components of the signal.

The acquisition board is connected to a Raspberry Pi, a single-board computer developed by the Raspberry Pi Foundation, based in the United Kingdom. Although its firmware is not open source, it allows installing a Linux-based distribution, keeping the open paradigm in our system. In this case, we chose to install Raspbian, a Debian-based distribution. The hardware connection between the OpenBCI board and the Raspberry Pi is made using a wireless RFDuino USB dongle. On the software side, we used an open Python library released by OpenBCI. To run this library on the Raspberry Pi, the source code of the mentioned library has been partially modified. It has also been necessary to recompile some third-party libraries so that they could run on the Raspberry Pi. We decided to power both the OpenBCI board and the Raspberry Pi via a USB connection to a power bank (20,000 mAh) to maximize the system autonomy and mobility.

This hardware configuration offers us all the characteristics that we were looking for: it has enough computational power to carry out our calculations, it is small and light, it allows us to use free and open-source software, and it is economical. It should be noted that although we have used the OpenBCI board as the acquisition system, there are other solutions that fit our needs, like the BITalino biosignal acquisition board. This board offers an EOG acquisition module and an open environment which includes a Python-based API for connection and signal acquisition over Raspberry Pi.

It should be mentioned that the data presented in this article have been processed using a conventional laptop instead of the Raspberry Pi, just for the convenience of the experimenters. During the development of the research, several tests were carried out that did not show any difference in the data or the results depending on the platform used.

We decided to use EOG over other eye movement detection techniques like Infrared Reflection Oculography (IROG) [35] or video-based systems [25], as the EOG technique does not require the placement of any device that could obstruct the subjects' visual field. Four electrodes were placed in contact with the skin close to the eyes to record both the horizontal and the vertical components of the eye movements [36,37].

2.2. Experimental Paradigm

Ten healthy subjects between 24 and 35 years old participated in the study and gave their informed consent for inclusion. The signal acquisition was performed in two stages: training and online prediction. For both stages, we asked the subjects to perform four different movements: Up, Down, Left, and Right. Each movement should start with the subject looking forward, then looking at one of these four points, and then looking again at the center. For the training stage, we acquired two blocks of 20 trials, five trials per movement. In these blocks, five "beep" tones were presented to the subject at the beginning of each block at 3 s intervals to indicate the interval in which they had to perform the requested action. After these initial tones, the desired action was presented via audio, and a "beep" tone was presented as a cue to perform the action. The system recorded during the 3 s after this tone was presented, and then presented another action to be performed. For some of the subjects, these two training blocks were appended in a single data file. The schematic of the training paradigm (offline acquisition) is shown in Figure 2a.

The online classification was performed with a block of 40 trials, 10 per movement, on Subject 1. After this experiment, we decided to reduce the number of trials per block to 20, 5 per movement, for the convenience of the subject. This online block had the same characteristics as the classification blocks except that the 5 initial tones were not presented, and the actions to be performed were separated by a 5 s interval to have enough time for the prediction tasks. Furthermore, in these blocks, the system recorded only during the 3 s after the cue tone was presented. During this stage, we generated two auxiliary files: one with the acquired data and the other containing the action that the user should perform and the action predicted. We only considered predicted actions with a prediction probability higher than a certain threshold. For the first subject, we set this threshold to 0.7, but after that experiment, we changed the threshold to 0.5. In this case, the auxiliary file corresponding to Subject 1 contains the predictions made using 0.7 as the prediction probability threshold. Figure 2b depicts the schematic of the online prediction paradigm.
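The probability threshold described above can be illustrated with a short sketch. It is not the code used in the study: the function name `accept_prediction`, the label order, and the example probabilities are assumptions made purely for illustration.

```python
from typing import Optional, Sequence

# Assumed label order; the study does not specify how classes were indexed.
LABELS = ("Up", "Down", "Left", "Right")


def accept_prediction(probabilities: Sequence[float],
                      labels: Sequence[str] = LABELS,
                      threshold: float = 0.5) -> Optional[str]:
    """Return the most probable label, or None when its probability
    is not higher than the threshold (the trial is then ignored)."""
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    if probabilities[best] > threshold:
        return labels[best]
    return None


# Hypothetical class probabilities for one online trial.
example = [0.62, 0.20, 0.10, 0.08]
print(accept_prediction(example))                 # accepted with the 0.5 threshold
print(accept_prediction(example, threshold=0.7))  # rejected with the 0.7 threshold
```

With the same hypothetical probabilities, the trial is kept under the 0.5 threshold and rejected under the 0.7 threshold first used for Subject 1.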

2.3. Signal Processing

A second-order 20 Hz lowpass Butterworth filter [37] was used to remove the artifacts arising from electrode or head movements and illumination changes [19,27,38]. A 20 Hz lowpass filter was used because the artifacts, as mentioned earlier, appear in the high frequencies [17], and the EOG signal information is contained mainly in low frequencies [30]. The irregularities in the signal after the lowpass filter were removed using a smoothing filter [30]. For applying these filters, we used the SciPy library. This library is commonly used and has a large community supporting it.
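A minimal sketch of this filtering step with SciPy is given below. The filter order, cutoff, and sampling rate come from the text. The zero-phase application (`filtfilt`) and the Savitzky-Golay smoother with its window length and polynomial order are assumptions, since the text does not name the smoothing filter or its parameters. The test signal is synthetic.

```python
import numpy as np
from scipy.signal import butter, filtfilt, savgol_filter

FS = 250.0     # sampling rate in Hz (Section 2.1)
CUTOFF = 20.0  # lowpass cutoff in Hz
ORDER = 2      # second-order Butterworth


def lowpass_and_smooth(signal, fs=FS, cutoff=CUTOFF, order=ORDER):
    """Lowpass-filter one EOG channel, then smooth the result."""
    b, a = butter(order, cutoff, btype="low", fs=fs)
    # Assumption: forward-backward filtering to avoid phase shift.
    filtered = filtfilt(b, a, signal)
    # Assumption: Savitzky-Golay smoothing; window and order are illustrative.
    return savgol_filter(filtered, window_length=31, polyorder=3)


# Synthetic 3 s trial: slow 1 Hz component plus 60 Hz interference.
t = np.arange(int(3 * FS)) / FS
clean = np.sin(2 * np.pi * 1.0 * t)
noisy = clean + 0.5 * np.sin(2 * np.pi * 60.0 * t)
processed = lowpass_and_smooth(noisy)

rms_before = np.sqrt(np.mean((noisy - clean) ** 2))
rms_after = np.sqrt(np.mean((processed - clean) ** 2))
print(f"RMS error before: {rms_before:.3f}, after: {rms_after:.3f}")
```

The slow component, which stands in for the eye-movement signal, passes almost unchanged, while the high-frequency interference is strongly attenuated.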

The last step in pre-processing was to standardize the information. This is done to remove the baseline of EOG signals [27]. The standardization was washed using the following formula:

where i is the sample that we are processing, t corresponds to a unmarried datapoint within a sample,

is the resulting datapoint,

is the data signal value before standardization,

is the mean value of the whole sample and

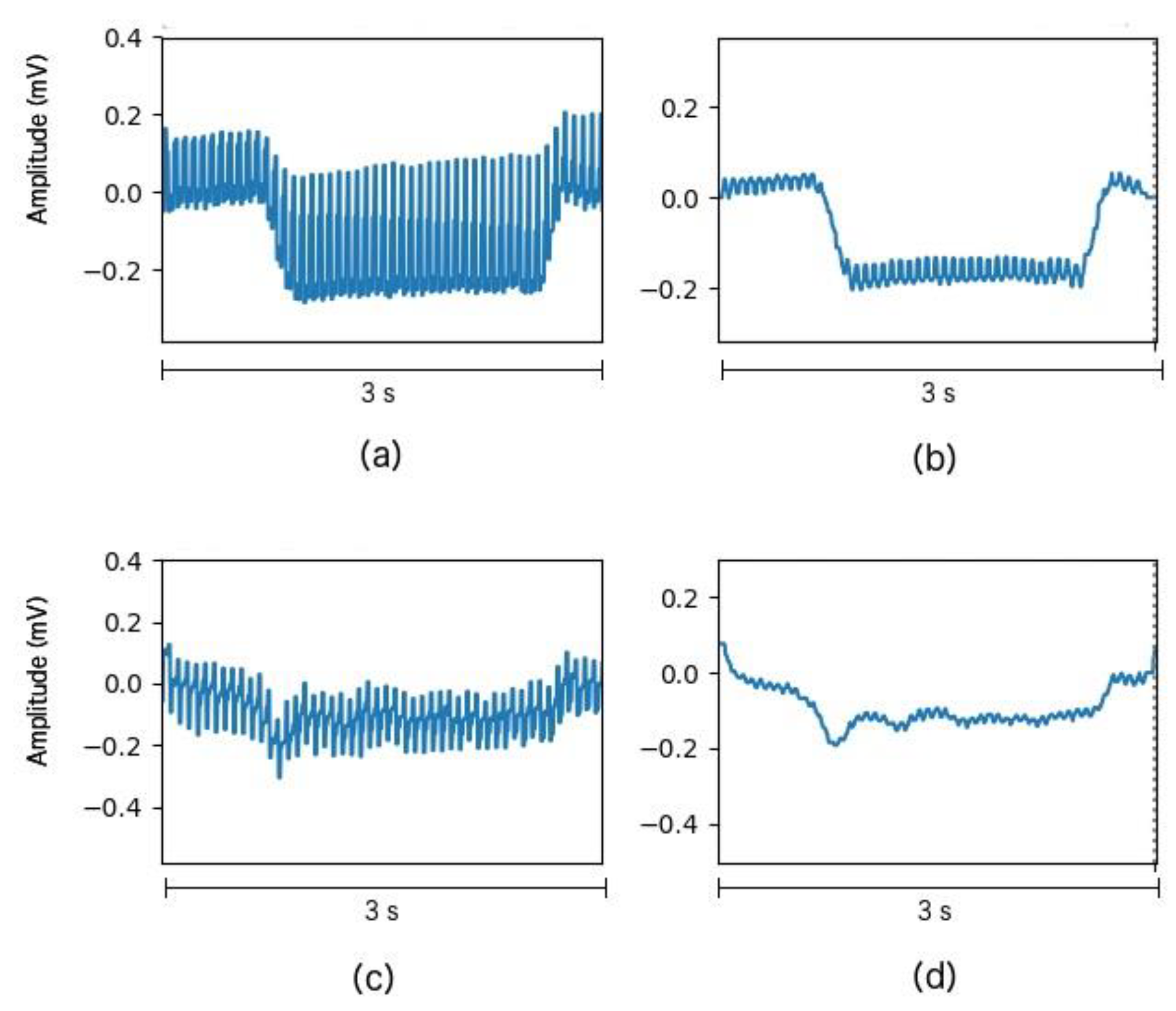

is the standard deviation of the whole sample. An instance of the processed signal tin can be seen in Figure 3, which shows a single Down trial extracted from a classification block of Field of study five.
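The standardization can be sketched in a few lines of NumPy. This is only an illustration: the function name `standardize` is hypothetical, and applying the mean and standard deviation separately to each channel of a trial is an assumption, as the text does not state whether the two components were standardized jointly or independently.

```python
import numpy as np


def standardize(sample):
    """Subtract the mean and divide by the standard deviation of each channel.

    sample: array of shape (n_channels, n_samples) or (n_samples,).
    """
    sample = np.asarray(sample, dtype=float)
    mu = sample.mean(axis=-1, keepdims=True)
    sigma = sample.std(axis=-1, keepdims=True)
    if np.any(sigma == 0):
        # A completely flat channel cannot be standardized.
        raise ValueError("flat channel: standard deviation is zero")
    return (sample - mu) / sigma


# Toy trial with a horizontal and a vertical channel (made-up values).
trial = np.array([[1.0, 2.0, 3.0, 4.0],
                  [10.0, 10.0, 20.0, 20.0]])
z = standardize(trial)
print(z.mean(axis=-1), z.std(axis=-1))
```

After this step each channel has zero mean and unit standard deviation, which removes the baseline offset between trials.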

Figure 4 depicts the vertical and horizontal components for four different eye movement tasks performed by Subject 5.

2.4. Feature Extraction

An essential step in our system's signal processing pipeline is feature extraction, which, for each sample, calculates specific characteristics that allow us to maximize the distance between elements in different classes and the similarity between those that belong to the same category. We use a model based on the calculation of three features for the horizontal and vertical components of our signal, i.e., six total features per sample. The features are the following:

- Min: The minimum amplitude value during the eye movement.

- Max: The maximum amplitude value during the eye movement.

- Median: The amplitude value during the eye movement that has 50% of the values above it and 50% below.
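The three features listed above can be computed per channel as in the following sketch. The function name `extract_features`, the channel order (horizontal first, then vertical), and the ordering of the resulting vector are assumptions for illustration. The trial values are made up.

```python
import numpy as np


def extract_features(trial):
    """Return [min_h, max_h, median_h, min_v, max_v, median_v] for one trial.

    trial: array of shape (2, n_samples); row 0 is assumed to be the
    horizontal component and row 1 the vertical component.
    """
    trial = np.asarray(trial, dtype=float)
    per_channel = np.column_stack([
        trial.min(axis=1),
        trial.max(axis=1),
        np.median(trial, axis=1),
    ])
    return per_channel.ravel()


# Made-up trial with five samples per channel.
trial = np.array([[0.0, -1.0, 3.0, 1.0, 0.0],
                  [2.0, 2.0, 5.0, 2.0, 2.0]])
features = extract_features(trial)
print(features)  # [-1.  3.  0.  2.  5.  2.]
```

Each trial is thus reduced from two time series to a six-element vector, which is the input to the classifier.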

2.5. Classification

Once we have calculated the features of each sample, we create a model using those feature values and their class labels. Even though some biosignal-based HCI use other machine learning techniques, such as artificial neural networks [29,36] or other statistical techniques [19], most of the HCI present in the literature use the machine learning technique called Support Vector Machine. We have decided to use SVM because of its simplicity over other techniques, which results in a lower computational cost and excellent performance.

In this study, we have used the implementation of the SVM of Scikit-Learn, a free and open-source Machine Learning Python library. This library has a high reputation in Machine Learning, and it has been widely used. The selected parameters for creating the model are a Radial Basis Function (RBF) as kernel [39], which allows us to create a model using data points that are not linearly separable [40], and a One vs. One strategy [41], i.e., creating a classifier for each pair of movement classes. Finally, we have performed 5-fold cross-validation [42], splitting the training dataset into 5 mutually exclusive subsets and creating 5 models, each one using 1 of these subsets to test the model and the other 4 to create it. Our model accuracy is calculated as the mean of the accuracy of these 5 models.
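The model construction described above could look roughly like the following Scikit-Learn sketch. It is not the study's code. The feature values are synthetic (hypothetical class centers plus Gaussian noise), all other `SVC` hyperparameters are left at library defaults, and the stratified fold assignment that `cross_val_score` applies to classifiers is an assumption about how the five subsets were formed.

```python
import numpy as np
from sklearn.model_selection import cross_val_score
from sklearn.svm import SVC

LABELS = ["Up", "Down", "Left", "Right"]

# Hypothetical class centers for the six features
# [min_h, max_h, median_h, min_v, max_v, median_v]; synthetic, not study data.
CENTERS = np.array([
    [-0.5, 0.5, 0.0, -0.5, 3.0, 0.0],  # Up: large vertical maximum
    [-0.5, 0.5, 0.0, -3.0, 0.5, 0.0],  # Down: large vertical minimum
    [-3.0, 0.5, 0.0, -0.5, 0.5, 0.0],  # Left: large horizontal minimum
    [-0.5, 3.0, 0.0, -0.5, 0.5, 0.0],  # Right: large horizontal maximum
])

rng = np.random.default_rng(0)
trials_per_class = 10  # two training blocks of 20 trials, 5 per movement each
X = np.vstack([c + 0.2 * rng.standard_normal((trials_per_class, 6))
               for c in CENTERS])
y = np.repeat(LABELS, trials_per_class)

# RBF kernel; SVC trains one binary classifier per pair of classes (one vs. one)
# for multiclass problems, and "ovo" also exposes the pairwise decision values.
clf = SVC(kernel="rbf", decision_function_shape="ovo")

# 5-fold cross-validation: each fold is used once for testing.
scores = cross_val_score(clf, X, y, cv=5)
model_accuracy = scores.mean()
print(f"fold accuracies: {scores}, mean: {model_accuracy:.3f}")
```

The mean of the five fold accuracies corresponds to what the text calls the model accuracy. With real data, `X` would hold the six extracted features of each training trial.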

3. Results

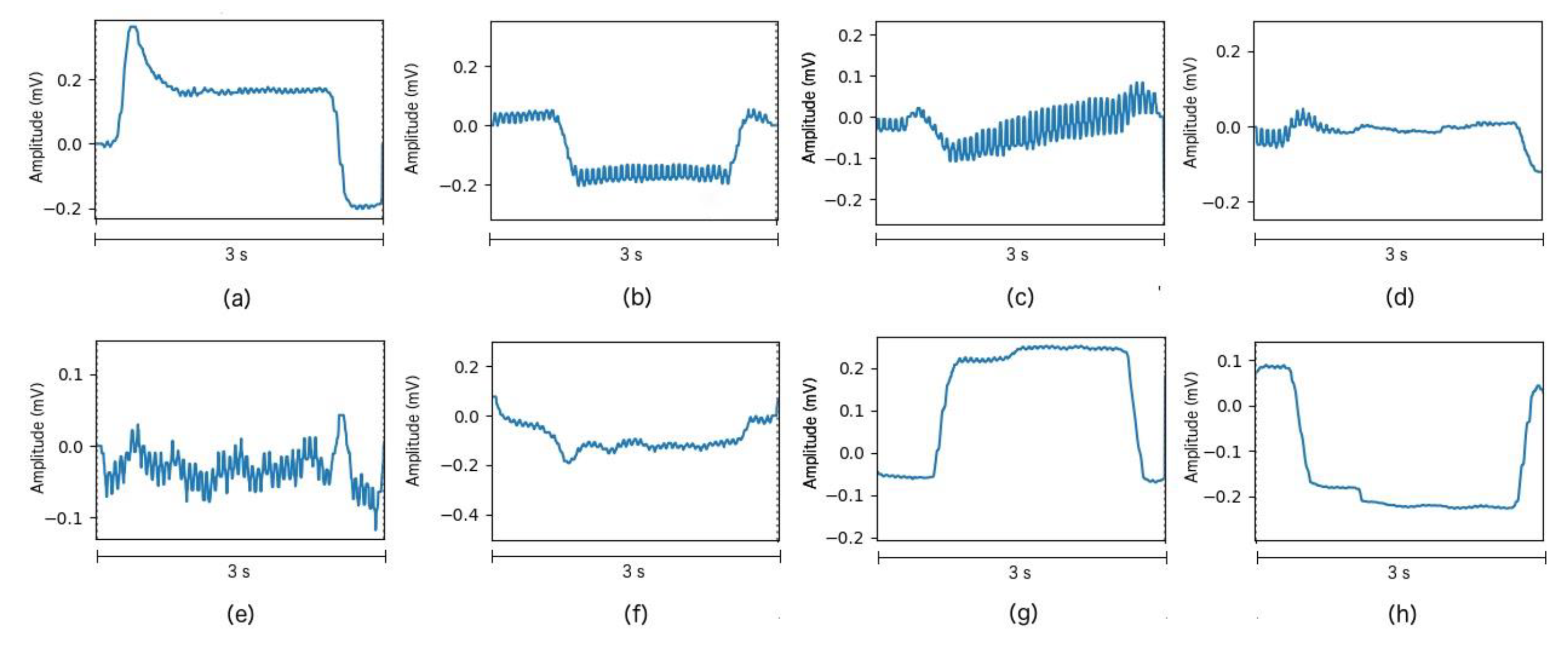

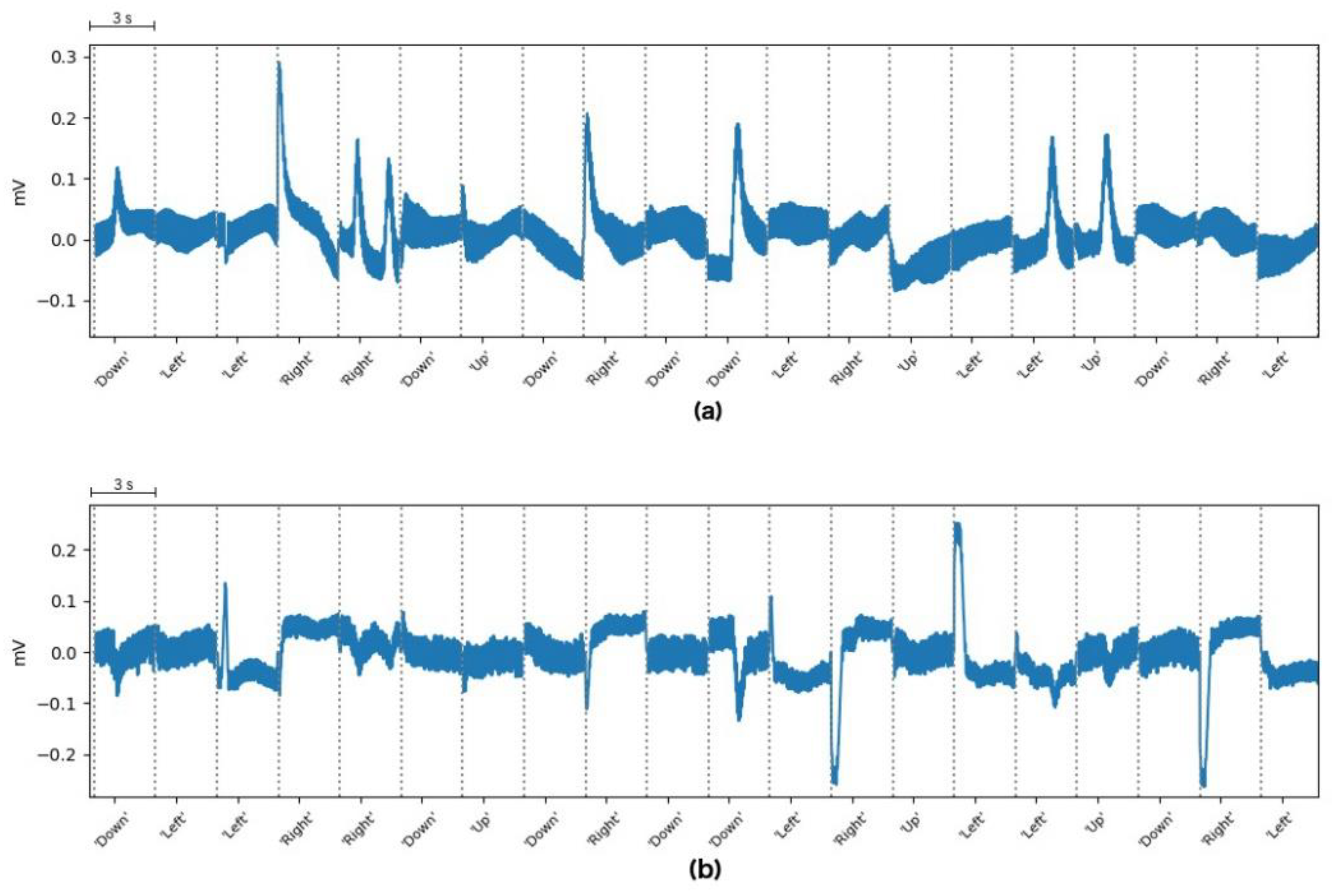

The acquired signal is processed to remove those signal components that contain no information, resulting in a clearer signal. The data were acquired from 10 healthy subjects between 24 and 35 years old. The result of signal processing can be seen in Figure 3 and Figure 4, which show single trials of a training block performed by Subject 5. As Figure 3 and Figure 4 show, the result of this step is the one expected. For Subject 8, we found flat or poor-quality signals in the vertical and horizontal components, so we decided to stop the acquisition and discard these data. Some trials extracted from this discarded block can be seen in Figure 5, which shows no clear steps or any other patterns for the four movements. This situation is probably due to an electrode movement, detachment, or misplacement that could not be solved during the experiment.

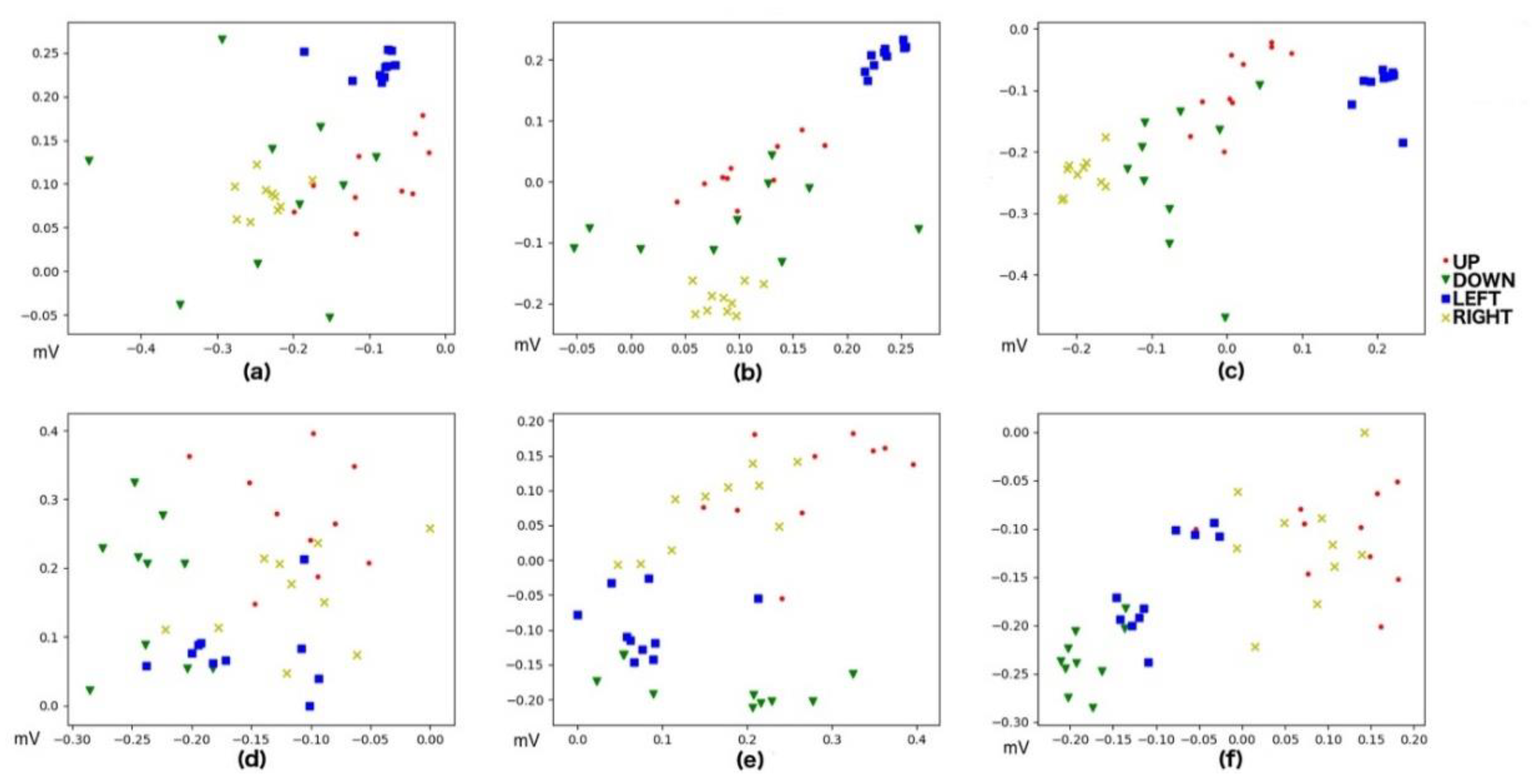

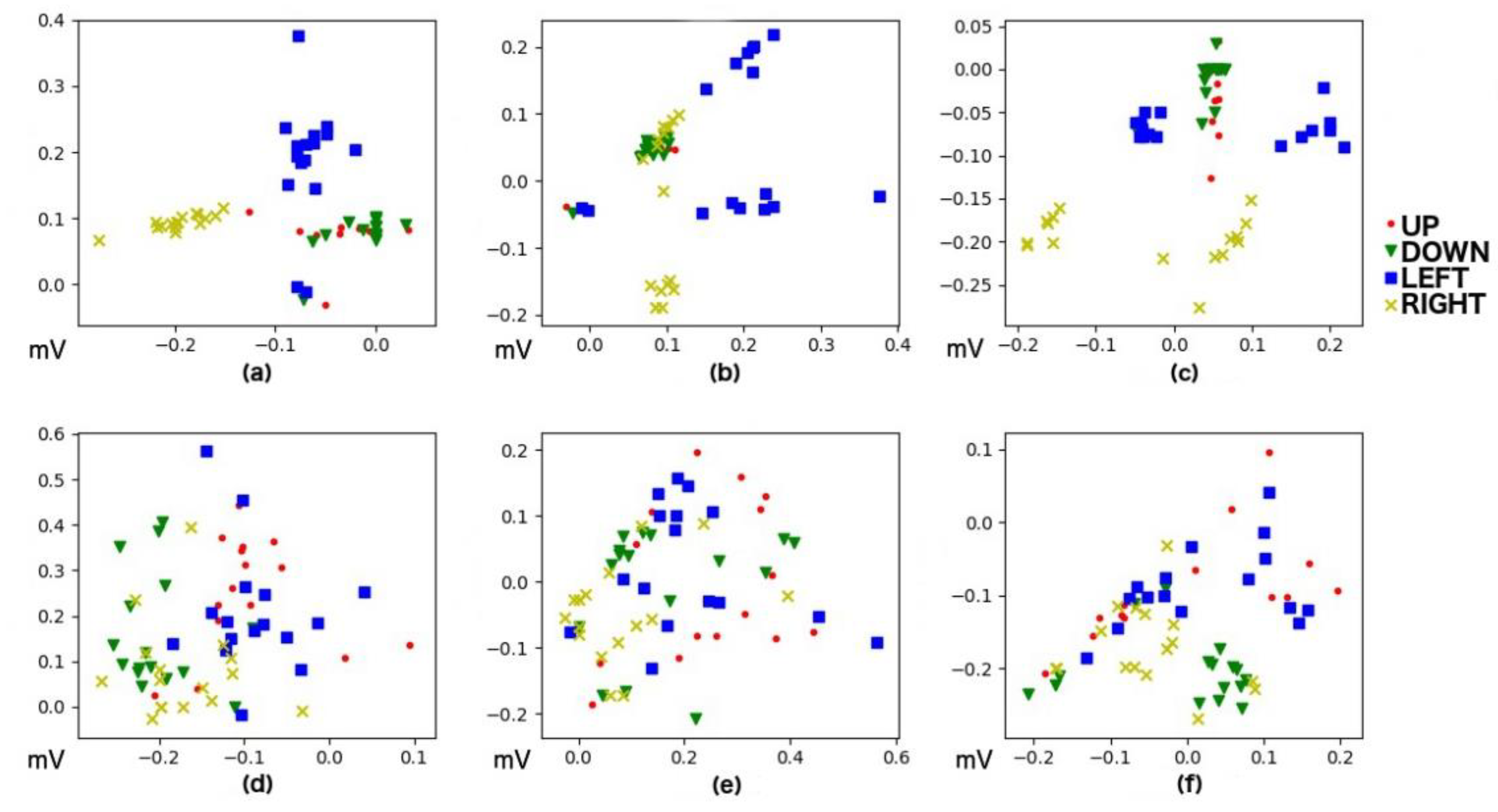

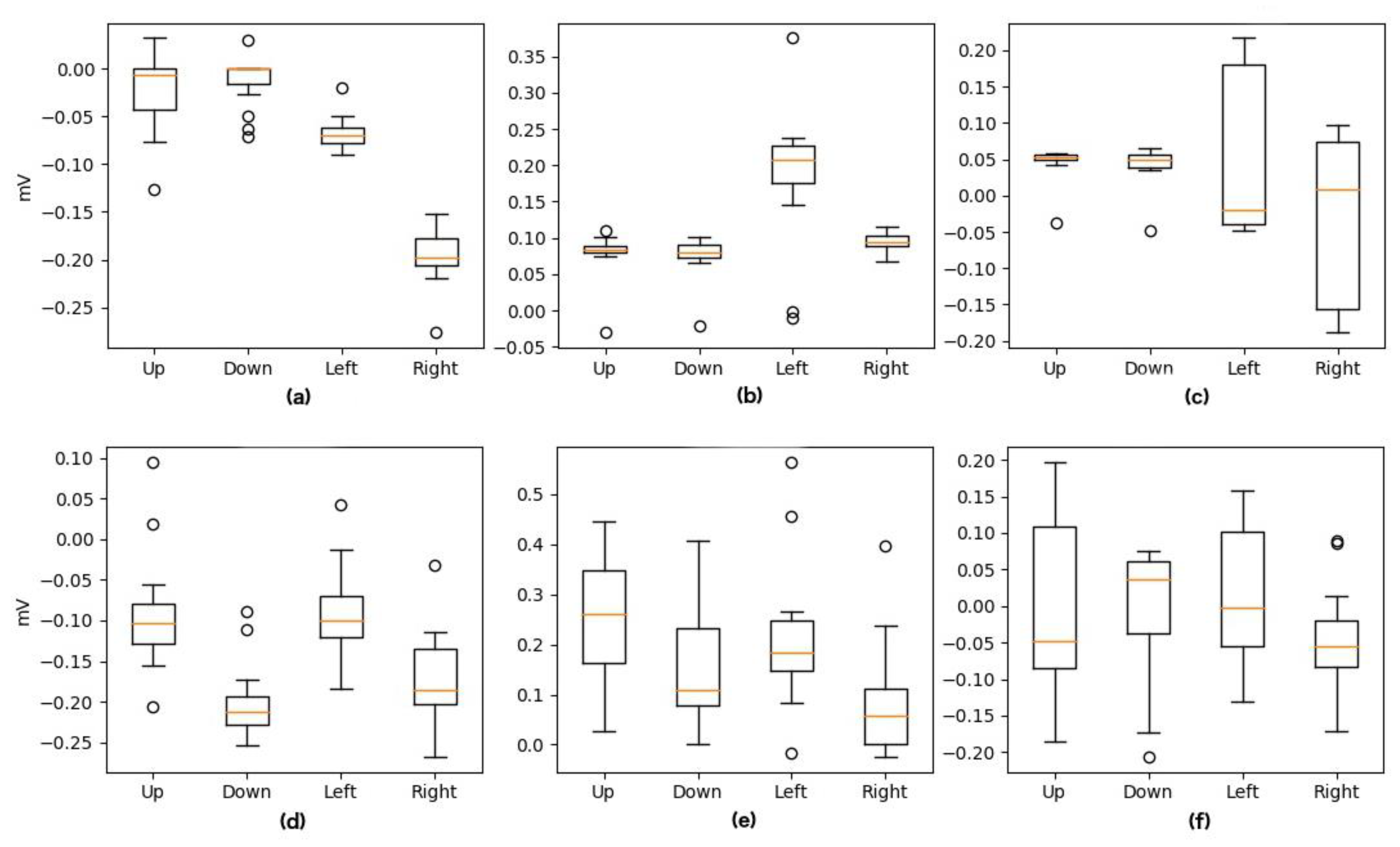

After artifact removal, feature extraction is performed to reduce the dimensionality of the input, leading to characteristics that define the signal without information loss. As mentioned above, the features used were Maximum, Minimum, and Median. It should be noted that Up and Down movements have relevant information only in the vertical channel of our signal, while Left and Right movements have this relevant information in the horizontal component. Figure 6 and Figure 7 present an example of this feature extraction process over two blocks of 20 trials, each corresponding to the training data of Subject 5, who ended up with 100% accuracy. Figure 8 and Figure 9 present an example of the same feature extraction process over two blocks of 20 trials performed by Subject 6, who ended up with 78.7% accuracy. In these figures, we can see that Subject 5, with 100% accuracy, shows a more evident difference in the data values than Subject 6, with 78.7% accuracy. Figure 8 and Figure 9 show some overlapping in the data values, which explains the lower classification accuracy achieved.

The final step in our pipeline is to build a model and perform an online classification of the subject's eye movements. As we mentioned before, we build our model using 5-fold cross-validation. Table 2 shows the model accuracy, i.e., the accuracy related to how well the model classifies the training data, as the mean of these five models for each subject. For the prediction accuracy, i.e., the accuracy related to the prediction of unseen data, we asked the subject to perform 20 movements per block (5 of each movement), as is explained in Section 2.2. We predicted those movements using the pre-built model and, finally, validated how accurate that prediction was.

As mentioned in Section 2.2, we only consider those predicted actions with a prediction probability higher than 0.5. For Subject 1, the prediction probability threshold was set to 0.7 during the online acquisition, so the auxiliary file with the predictions corresponds to this threshold; after the experiment, we re-analyzed the online data using a 0.5 threshold.

We acquired one single online block for Subjects 1, 2, 5 and 7. For Subject 3, we acquired three online blocks with 50%, 80%, and 85% accuracy. For Subject 6, we acquired two online blocks with 80% and 85% accuracy. For Subject 10, we acquired three online blocks with 55%, 70%, and 80% accuracy. It can be seen that for these subjects, the online accuracy increases with each block acquisition. The accuracy shown in Table 2 corresponds to the online block with the highest accuracy for each subject. For Subject 4, the training and online data have poor quality (66.7% accuracy for the model and 20% accuracy for the online prediction). Subject 9 had a good model accuracy (95%) but poor-quality signals during online acquisition (50% and 20% accuracy). Post-experimental analysis of the data revealed noisy and flat signals, showing no clear pattern in the signal acquired from Subjects 4 and 9, similar to the signal acquired from Subject 8 (see Figure 5 for the signal from Subject 8). These distortions may have arisen due to probable electrode movement, detachment, or misplacement. Thus, we decided to discard the data from Subjects 4, 8 and 9.

4. Discussion

It must be clear that in order to make a completely fair comparison between our system and the state-of-the-art systems, some extensive testing would be required. These tests should process the data acquired in this study with other processing pipelines, run our pipeline over the data acquired in other studies, and adapt our acquisition and processing modules to be connected to further systems found in the literature. The results obtained after this process would give us a full picture of the differences between our system and those already in place. Unfortunately, due to lack of time and materials, these tests could not be carried out.

Concentration loss and tiredness are two of the biggest challenges when it comes to EOG-based HCI. As reported in Barea et al. [43], the number of failures using this kind of system increases over time after a specific period of use. This has been seen during the development of this study, where long periods of system use have led to the appearance of irritation and watery eyes. This could be a problem for subjects who use the system for a long time. In the above-mentioned paper [43], the researchers deal with this problem by retraining the system.

Another challenge related to our system is the presence of unintentional eye blinks. Eye blinks create artifacts in the EOG signal and, also, during the eye blinks, there is a slight eye movement [37]. Trials containing eye blinks can lead to a reduced model accuracy if they occur in the training stage or to a trial misclassification if they occur in the online acquisition stage. Pander et al. [44] and Merino et al. [30] have proposed methods to detect spontaneous blinks so these trials can be rejected. Yathunanthan et al. [6] proposed a system where eye blinks are automatically discarded.

Our system, like most of the available systems in the literature [19,20,21,29,30,38,43], uses a discrete approach, i.e., the user is not free to perform an action when desired, but the action must be performed at a specific time. This affects the agility of the system by increasing the time needed to perform an action. Barea et al. [38,43] and Arai et al. [25] have proposed systems with a continuous approach where the subject has no time restrictions to perform an action.

There are different ways to improve our system in future work. First, we could put in place a mechanism to detect and remove unintentional blinks. This would prevent us from discarding training blocks, or could improve the training accuracy in the cases in which these unintentional blinks occur. In some cases, a continuous online classification means a considerable advantage. Therefore, it would be interesting to add the necessary strategies to perform this type of classification. Finally, by combining our system with further communication or movement assistance systems, we could check its performance in a complete HCI loop.

5. Conclusions

We have presented an EOG signal classification system that can achieve a 90% mean accuracy in online classifications. These results are equivalent to other state-of-the-art systems. Our system is built using only open components, showing that it is possible to avoid the usage of expensive and proprietary tools in this scope. As intended, the system is small, easy to carry, and has complete autonomy. This is achieved using OpenBCI and Raspberry Pi as hardware, connected to a power bank as a power source.

Because of the use of open hardware and software technologies, the system is also open, easy to replicate, and can be improved or modified by someone with the required skills to build a tailored solution. The use of open technologies also helps us to obtain a cheap platform.

Finally, the resulting system is easy to connect to subsequent communication or movement assistance systems.

Author Contributions

Conceptualization, J.M.-C., N.B., and U.C.; methodology, J.M.-C., M.K.A., A.J.-G. and U.C.; software, J.M.-C.; validation, A.J.-G.; formal analysis, J.M.-C.; data curation, J.M.-C. and S.W.; writing (original draft preparation), J.M.-C., S.W., M.K.A. and U.C.; writing (review and editing), J.M.-C., A.T., N.B., and U.C.; supervision, U.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Deutsche Forschungsgemeinschaft (DFG) DFG BI 195/77-1, BMBF (German Ministry of Education and Research) 16SV7701 CoMiCon, LUMINOUS-H2020-FETOPEN-2014-2015-RIA (686764), and Wyss Center for Bio and Neuroengineering, Geneva.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hossain, Z.; Shuvo, Yard.M.H.; Sarker, P. Hardware and Software Implementation of Real Time Electrooculogram (EOG) Acquisition System to Control Computer Cursor with Eyeball Movement. In Proceedings of the fourth International Briefing on Advances in Electrical Applied science (ICAEE), Dhaka, Bangladesh, 28–thirty September 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 132–137. [Google Scholar] [CrossRef]

- Usakli, A.B.; Gurkan, S. Design of a Novel Efficient Human–Computer Interface: An Electrooculagram Based Virtual Keyboard. IEEE Trans. Instrum. Meas. 2010, 59, 2099–2108. [Google Scholar] [CrossRef]

- Argentim, L.M.; Castro, Chiliad.C.F.; Tomaz, P.A. Human Interface for a Neuroprothesis Remotely Command. In Proceedings of the 11th International Joint Conference on Biomedical Engineering science Systems and Technologies, Funchal, Madeira, Portugal, 19–21 January 2018; SCITEPRESS—Science and Technology Publications: Setubal, Portugal, 2018; pp. 247–253. [Google Scholar] [CrossRef]

- Rokonuzzaman, Due south.One thousand.; Ferdous, S.Chiliad.; Tuhin, R.A.; Arman, S.I.; Manzar, T.; Hasan, M.Northward. Design of an Democratic Mobile Wheelchair for Disabled Using Electrooculogram (EOG) Signals. In Mechatronics; Jablonski, R., Brezina, T., Eds.; Springer: Berlin/Heidelberg, Frg, 2011; pp. 41–53. [Google Scholar]

- Barea, R.; Boquete, L.; Bergasa, Fifty.Chiliad.; López, East.; Mazo, M. Electro-Oculographic Guidance of a Wheelchair Using Center Movements Codification. Int. J. Robot. Res. 2003, 22, 641–652. [Google Scholar] [CrossRef]

- Yathunanthan, S.; Chandrasena, L.U.R.; Umakanthan, A.; Vasuki, V.; Munasinghe, Southward.R. Decision-making a Wheelchair past Use of EOG Signal. In Proceedings of the 4th International Conference on Data and Automation for Sustainability, Colombo, Sri Lanka, 12–14 Dec 2008; IEEE: Piscataway, NJ, United states, 2008; pp. 283–288. [Google Scholar] [CrossRef]

- Mazo, M.; Rodríguez, F.J.; Lázaro, J.L.; Ureña, J.; García, J.C.; Santiso, E.; Revenga, P.A. Electronic Control of a Wheelchair Guided by Phonation Commands. Command. Eng. Pract. 1995, 3, 665–674. [Google Scholar] [CrossRef]

- Chaudhary, U.; Mrachacz-Kersting, N.; Birbaumer, North. Neuropsychological and Neurophysiological Aspects of Brain-computer-interface (BCI)-control in Paralysis. J. Physiol. 2020, JP278775. [Google Scholar] [CrossRef] [PubMed]

- Chaudhary, U.; Birbaumer, N.; Curado, M.R. Encephalon-Machine Interface (BMI) in Paralysis. Ann. Phys. Rehabil. Med. 2015, 58, 9–thirteen. [Google Scholar] [CrossRef] [PubMed]

- Chaudhary, U.; Birbaumer, Northward.; Ramos-Murguialday, A. Brain–Computer Interfaces in the Completely Locked-in State and Chronic Stroke. In Progress in Brain Enquiry; Elsevier: Amsterdam, The Netherlands, 2016; Volume 228, pp. 131–161. [Google Scholar] [CrossRef]

- Chaudhary, U.; Birbaumer, North.; Ramos-Murguialday, A. Encephalon–Computer Interfaces for Advice and Rehabilitation. Nat. Rev. Neurol. 2016, 12, 513–525. [Google Scholar] [CrossRef]

- Rosen, J.; Brand, Chiliad.; Fuchs, M.B.; Arcan, Yard. A Myosignal-Based Powered Exoskeleton System. IEEE Trans. Syst. Man Cybern. Part. A Syst. Hum. 2001, 31, 210–222. [Google Scholar] [CrossRef]

- Ferreira, A.; Celeste, W.C.; Cheein, F.A.; Bastos-Filho, T.F.; Sarcinelli-Filho, K.; Carelli, R. Human-Machine Interfaces Based on EMG and EEG Practical to Robotic Systems. J. NeuroEng. Rehabil. 2008, 5, 10. [Google Scholar] [CrossRef]

- Chaudhary, U.; Xia, B.; Silvoni, S.; Cohen, L.G.; Birbaumer, Northward. Brain–Computer Interface–Based Advice in the Completely Locked-In State. PLOS Biol. 2017, 15, e1002593. [Google Scholar] [CrossRef]

- Khalili Ardali, M.; Rana, A.; Purmohammad, Thousand.; Birbaumer, N.; Chaudhary, U. Semantic and BCI-Performance in Completely Paralyzed Patients: Possibility of Language Attrition in Completely Locked in Syndrome. Encephalon Lang. 2019, 194, 93–97. [Google Scholar] [CrossRef]

- Gallegos-Ayala, G.; Furdea, A.; Takano, K.; Ruf, C.A.; Flor, H.; Birbaumer, N. Brain Communication in a Completely Locked-in Patient Using Bedside near-Infrared Spectroscopy. Neurology 2014, 82, 1930–1932. [Google Scholar] [CrossRef] [PubMed]

- Bharadwaj, Southward.; Kumari, B.; Tech, M. Electrooculography: Analysis on Device Control past Signal Processing. Int. J. Adv. Res. Comput. Sci. 2017, 8, 787–790. [Google Scholar]

- Heide, W.; Koenig, E.; Trillenberg, P.; Kömpf, D.; Zee, D.Southward. Electrooculography: Technical Standards and Applications. Electroencephalogr. Clin. Neurophysiol. Suppl. 1999, 52, 223–240. [Google Scholar] [PubMed]

- Lv, Z.; Wang, Y.; Zhang, C.; Gao, 10.; Wu, X. An ICA-Based Spatial Filtering Approach to Saccadic EOG Signal Recognition. Biomed. Signal. Process. Control. 2018, 43, ix–17. [Google Scholar] [CrossRef]

- Wu, S.Fifty.; Liao, Fifty.D.; Lu, S.W.; Jiang, West.L.; Chen, S.A.; Lin, C.T. Decision-making a Homo–Estimator Interface System with a Novel Classification Method That Uses Electrooculography Signals. IEEE Trans. Biomed. Eng. 2013, 60, 2133–2141. [Google Scholar] [CrossRef]

- Huang, Q.; He, S.; Wang, Q.; Gu, Z.; Peng, North.; Li, Yard.; Zhang, Y.; Shao, One thousand.; Li, Y. An EOG-Based Human–Machine Interface for Wheelchair Control. IEEE Trans. Biomed. Eng. 2018, 65, 2023–2032. [Google Scholar] [CrossRef]

- Larson, A.; Herrera, J.; George, Yard.; Matthews, A. Electrooculography Based Electronic Communication Device for Individuals with ALS. In Proceedings of the IEEE Sensors Applications Symposium (SAS), Glassboro, NJ, United states of america, xiii–15 March 2017; IEEE: Piscataway, NJ, USA, 2017; pp. ane–5. [Google Scholar] [CrossRef]

- Iáñez, Eastward.; Azorin, J.Chiliad.; Perez-Vidal, C. Using Eye Movement to Command a Reckoner: A Design for a Lightweight Electro-Oculogram Electrode Array and Calculator Interface. PLoS Ane 2013, eight, e67099. [Google Scholar] [CrossRef]

- Kherlopian, A.; Sajda, P.; Gerrein, J.; Yue, G.; Kim, K.; Kim, J.W.; Sukumaran, M. Electrooculogram Based System for Computer Control Using a Multiple Feature Classification Model. 4. In Proceedings of the 28th IEEE EMBS Annual International Conference, New York, NY, USA, 30 August–3 September 2006. [Google Scholar]

- Arai, K.; Mardiyanto, R. A Prototype of Electric Wheelchair Controlled by Eye-Only for Paralyzed User. J. Robot. Mechatron. 2011, 23, 66–74. [Google Scholar] [CrossRef]

- Heo, J.; Yoon, H.; Park, K. A Novel Wearable Forehead EOG Measurement System for Human Computer Interfaces. Sensors 2017, 17, 1485. [Google Scholar] [CrossRef]

- Qi, L.J.; Alias, N. Comparison of ANN and SVM for Classification of Eye Movements in EOG Signals. J. Phys. Conf. Ser. 2018, 971, 012012. [Google Scholar] [CrossRef]

- Guo, X.; Pei, W.; Wang, Y.; Chen, Y.; Zhang, H.; Wu, X.; Yang, X.; Chen, H.; Liu, Y.; Liu, R. A Human-Machine Interface Based on Single Channel EOG and Patchable Sensor. Biomed. Signal. Process. Control. 2016, 30, 98–105. [Google Scholar] [CrossRef]

- Erkaymaz, H.; Ozer, M.; Orak, İ.M. Detection of Directional Eye Movements Based on the Electrooculogram Signals through an Artificial Neural Network. Chaos Solitons Fractals 2015, 77, 225–229. [Google Scholar] [CrossRef]

- Merino, M.; Rivera, O.; Gomez, I.; Molina, A.; Dorronzoro, E. A Method of EOG Signal Processing to Detect the Direction of Eye Movements. In Proceedings of the First International Conference on Sensor Device Technologies and Applications, Venice, Italy, 18–25 July 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 100–105. [Google Scholar] [CrossRef]

- Aungsakul, S.; Phinyomark, A.; Phukpattaranont, P.; Limsakul, C. Evaluating Feature Extraction Methods of Electrooculography (EOG) Signal for Human-Computer Interface. Procedia Eng. 2012, 32, 246–252. [Google Scholar] [CrossRef]

- Phukpattaranont, P.; Aungsakul, S.; Phinyomark, A.; Limsakul, C. Efficient Feature for Classification of Eye Movements Using Electrooculography Signals. Therm. Sci. 2016, 20, 563–572. [Google Scholar] [CrossRef]

- Boser, B.; Guyon, I.; Vapnik, V. A Training Algorithm for Optimal Margin Classifiers. In Proceedings of the Fifth Annual Workshop on Computational Learning Theory, Pittsburgh, PA, USA, 27–29 July 1992; Association for Computing Machinery: New York, NY, USA, 1992; pp. 144–152. [Google Scholar]

- Vapnik, V.; Golowich, S.E.; Smola, A.J. Support Vector Method for Function Approximation, Regression Estimation and Signal Processing. In Advances in Neural Information Processing Systems; Mozer, M.C., Jordan, M.I., Petsche, T., Eds.; MIT Press: Cambridge, MA, USA, 1997. [Google Scholar]

- Hess, C.W.; Muri, R.; Meienberg, O. Recording of Horizontal Saccadic Eye Movements: Methodological Comparison Between Electro-Oculography and Infrared Reflection Oculography. Neuro Ophthalmol. 1986, 6, 189–197. [Google Scholar] [CrossRef]

- Barea, R.; Boquete, L.; Ortega, S.; López, E.; Rodríguez-Ascariz, J.M. EOG-Based Eye Movements Codification for Human Computer Interaction. Expert Syst. Appl. 2012, 39, 2677–2683. [Google Scholar] [CrossRef]

- Chang, W.D. Electrooculograms for Human–Computer Interaction: A Review. Sensors 2019, 19, 2690. [Google Scholar] [CrossRef]

- Barea, R.; Boquete, L.; Mazo, M.; Lopez, E. System for Assisted Mobility Using Eye Movements Based on Electrooculography. IEEE Trans. Neural Syst. Rehabil. Eng. 2002, 10, 209–218. [Google Scholar] [CrossRef]

- Amari, S.; Wu, S. Improving Support Vector Machine Classifiers by Modifying Kernel Functions. Neural Netw. 1999, 12, 783–789. [Google Scholar] [CrossRef]

- Ben-Hur, A.; Weston, J. A User's Guide to Support Vector Machines. In Data Mining Techniques for the Life Sciences; Carugo, O., Eisenhaber, F., Eds.; Humana Press: Totowa, NJ, USA, 2010; Volume 609, pp. 223–239. [Google Scholar] [CrossRef]

- Hsu, C.W.; Lin, C.J. A Comparison of Methods for Multiclass Support Vector Machines. IEEE Trans. Neural Netw. 2002, 13, 415–425. [Google Scholar] [CrossRef]

- Kohavi, R. A Study of Cross-Validation and Bootstrap for Accuracy Estimation and Model Selection. Int. Jt. Conf. Artif. Intell. 1995, 14, 8. [Google Scholar]

- Barea, R.; Boquete, L.; Rodriguez-Ascariz, J.M.; Ortega, S.; López, E. Sensory System for Implementing a Human-Computer Interface Based on Electrooculography. Sensors 2010, 11, 310–328. [Google Scholar] [CrossRef] [PubMed]

- Pander, T.; Przybyła, T.; Czabanski, R. An Application of Detection Function for the Eye Blinking Detection. In Proceedings of the Conference on Human System Interactions, Krakow, Poland, 25–27 May 2008; IEEE: Piscataway, NJ, USA, 2008; pp. 287–291. [Google Scholar] [CrossRef]

Figure 1. Block diagram with system connection.

Figure 2. Acquisition paradigm. (a) Offline acquisition. (b) Online acquisition.

Figure 3. Down movement example taken from Subject 5. The X-axis depicts time (in seconds), and the Y-axis represents the signal amplitude (in millivolts). (a) Unfiltered vertical component. (b) Filtered vertical component. (c) Unfiltered horizontal component. (d) Filtered horizontal component.

Figure 4. Processed signal examples taken from Subject 5. The X-axis depicts time (in seconds), and the Y-axis represents the signal amplitude (in millivolts). (a) Vertical component for Up movement. (b) Vertical component for Down movement. (c) Vertical component for Left movement. (d) Vertical component for Right movement. (e) Horizontal component for Up movement. (f) Horizontal component for Down movement. (g) Horizontal component for Left movement. (h) Horizontal component for Right movement.

Figure 5. Example trials taken from Subject 8. The X-axis depicts time (each trial is 3 s), and the Y-axis represents the signal amplitude (in millivolts). (a) Vertical component. (b) Horizontal component.

Figure 6. Values after Feature Extraction for Up, Down, Left, and Right movements performed by Subject 5 (100% model accuracy). Both the X-axis and the Y-axis depict signal values (in millivolts). (a) Horizontal Min vs. Max. (b) Horizontal Max vs. Median. (c) Horizontal Median vs. Min. (d) Vertical Min vs. Max. (e) Vertical Max vs. Median. (f) Vertical Median vs. Min.

Figure 7. Values after Feature Extraction for Up, Down, Left, and Right movements performed by Subject 5 (100% model accuracy). The X-axis depicts movement class, and the Y-axis depicts signal amplitude (in millivolts). (a) Horizontal Min. (b) Horizontal Max. (c) Horizontal Median. (d) Vertical Min. (e) Vertical Max. (f) Vertical Median.

Figure 8. Values after Feature Extraction for Up, Down, Left, and Right movements performed by Subject 6 (78.7% model accuracy). Both the X-axis and the Y-axis depict signal values (in millivolts). (a) Horizontal Min vs. Max. (b) Horizontal Max vs. Median. (c) Horizontal Median vs. Min. (d) Vertical Min vs. Max. (e) Vertical Max vs. Median. (f) Vertical Median vs. Min.

Figure 9. Values after Feature Extraction for Up, Down, Left, and Right movements performed by Subject 6 (78.7% model accuracy). The X-axis depicts movement class, and the Y-axis depicts signal amplitude (in millivolts). (a) Horizontal Min. (b) Horizontal Max. (c) Horizontal Median. (d) Vertical Min. (e) Vertical Max. (f) Vertical Median.

Table 1. Comparison of results between different studies.

| Study | Movements | Acquisition | Processing | Method | Accuracy |
|---|---|---|---|---|---|
| Qi et al. [27] | Up, Down, Left, Right | Commercial | - | Offline | 70% |
| Guo et al. [28] | Up, Down, Blink | Commercial | Laptop | Online | 84% |
| Kherlopian et al. [24] | Left, Right, Center | Commercial | Laptop | Online | 80% |
| Wu et al. [20] | Up, Down, Left, Right, Up-Right, Up-Left, Down-Right, Down-Left | Self-designed | Laptop | Online | 88.59% |
| Heo et al. [26] | Up, Down, Left, Right, Blink | Self-designed | Self-designed + Laptop | Online | 91.25% |
| Heo et al. [26] | Double Blink | Self-designed | Self-designed + Laptop | Online | 95.12% |
| Erkaymaz et al. [29] | Up, Down, Left, Right, Blink, Tic | Commercial | Laptop | Offline | 93.82% |
| Merino et al. [30] | Up, Down, Left, Right | Commercial | Laptop | Online | 94.11% |
| Huang et al. [21] | Blink | Self-designed | Laptop | Online | 96.7% |
| Lv et al. [19] | Up, Down, Left, Right | Commercial | Laptop | Offline | 99% |
| Yathunanthan et al. [6] | Up, Down, Left, Right | Self-designed | Self-designed | Online | 99% |

Table 2. Model and Prediction Accuracies.

| Subject | Model Mean Accuracy | Online Accuracy |
|---|---|---|
| Subject 1 | 100% | 90% |
| Subject 2 | 100% | 95% |
| Subject 3 | 92.5% | 85% |
| Subject 5 | 100% | 100% |
| Subject 6 | 78.7% | 85% |
| Subject 7 | 97.5% | 95% |
| Subject 10 | 90.8% | 80% |
| MEAN | 94.21% | 90% |
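
The MEAN row of Table 2 can be checked directly from the seven per-subject values. The snippet below is only an illustrative sketch: the dictionary names and the `mean` helper are hypothetical and not part of the authors' code, and the numbers are copied from the table.

```python
# Per-subject accuracies (in percent) copied from Table 2.
# The variable names here are illustrative, not taken from the paper.
model_accuracy = {1: 100.0, 2: 100.0, 3: 92.5, 5: 100.0, 6: 78.7, 7: 97.5, 10: 90.8}
online_accuracy = {1: 90.0, 2: 95.0, 3: 85.0, 5: 100.0, 6: 85.0, 7: 95.0, 10: 80.0}


def mean(values):
    """Arithmetic mean of an iterable of numbers."""
    values = list(values)
    return sum(values) / len(values)


model_mean = mean(model_accuracy.values())
online_mean = mean(online_accuracy.values())

print(f"Model mean accuracy:  {model_mean:.2f}%")
print(f"Online mean accuracy: {online_mean:.2f}%")
```

Both averages are taken over the seven subjects listed in the table, so they agree with the reported 94.21% and 90% only for that subset.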

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).
